I am a Postdoctoral Scholar at the Berkeley AI Research (BAIR) Lab and a Research Scientist at the MIT-IBM Watson AI Lab. I work with Prof. Trevor Darrell on Structured Physical Intelligence and closely collaborate with Profs. Jitendra Malik, Deva Ramanan, and Shankar Sastry. I design architectures and learning algorithms for Physical AI, grounded in structural priors. My work develops physical inductive biases and structured world representations for general-purpose agents, using robots as the ultimate testbed. Previously, I received my Ph.D. from Tel Aviv University, advised by Prof. Amir Globerson; I have an Erdős number of 3. I graduated magna cum laude with an M.Sc. (CS), a B.Sc. (CS), and a B.Sc. (Physics).

Currently on the academic job market. Email / Twitter / BlueSky / Github / LinkedIn / CV / Google Scholar |

James Ni*, Zekai Wang*, Wei Lin*, Amir Bar*, Yann LeCun†, Trevor Darrell†, Jitendra Malik†, Roei Herzig† Technical Report, 2025 project page / bibtex / social media We developed a generalist tracking policy that enables a humanoid to mimic human actions from noisy, generated videos in a zero-shot manner. |

|

Chancharik Mitra*, Yusen Luo*, Raj Saravanan*, Dantong Niu, Anirudh Pai, Jesse Thomason, Trevor Darrell, Abrar Anwar, Deva Ramanan, Roei Herzig Technical Report, 2025 project page / bibtex / social media We introduce Robotic Steering, a finetuning approach grounded in mechanistic interpretability that leverages few-shot demonstrations to identify and selectively finetune task-specific attention heads aligned with the physical, visual, and linguistic requirements of robotic tasks. |

|

Dantong Niu*, Yuvan Sharma*, Baifeng Shi*, Rachel Ding, Matteo Gioia, Haoru Xue, Henry Tsai, Konstantinos Kallidromitis, Anirudh Pai, Shankar Sastry†, Trevor Darrell†, Jitendra Malik†, Roei Herzig† International Conference on Learning Representations (ICLR), 2026 project page / bibtex / social media Training robots on random toys enables zero-shot grasping of real-world objects. |

|

Wen-Han Hsieh, Elvis Hsieh, Dantong Niu, Trevor Darrell, Roei Herzig, David M. Chan Conference on Empirical Methods in Natural Language Processing (EMNLP), 2025 project page / code / bibtex / social media IVA is a unified framework for Vision-Language-Action models that detects false-premise instructions, clarifies them in language, and acts safely, improving robustness while preserving task performance. |

|

Dantong Niu*, Yuvan Sharma*, Haoru Xue, Giscard Biamby, Junyi Zhang, Ziteng Ji, Trevor Darrell†, Roei Herzig† International Conference on Machine Learning (ICML), 2025 project page / code / bibtex / social media We propose ARM4R, an Autoregressive Robotic Model that leverages low-level 4D Representations learned from human video data to yield a better robotic model. |

|

Yida Yin*, Zekai Wang*, Yuvan Sharma, Dantong Niu, Trevor Darrell, Roei Herzig International Conference on Robotics and Automation (ICRA), 2025 project page / code / bibtex / social media We introduce RoboPrompt, a framework that enables off-the-shelf text-only LLMs to directly predict robot actions through ICL without training. |

|

Dantong Niu*, Yuvan Sharma*, Giscard Biamby, Jerome Quenum, Yutong Bai, Baifeng Shi, Trevor Darrell†, Roei Herzig† Conference on Robot Learning (CoRL), 2024 project page / code / bibtex / social media We propose LLARVA, a model trained with a novel instruction tuning method that leverages structured prompts to unify a range of robotic configurations and introduces the concept of visual traces to further align the vision and action spaces. |

|

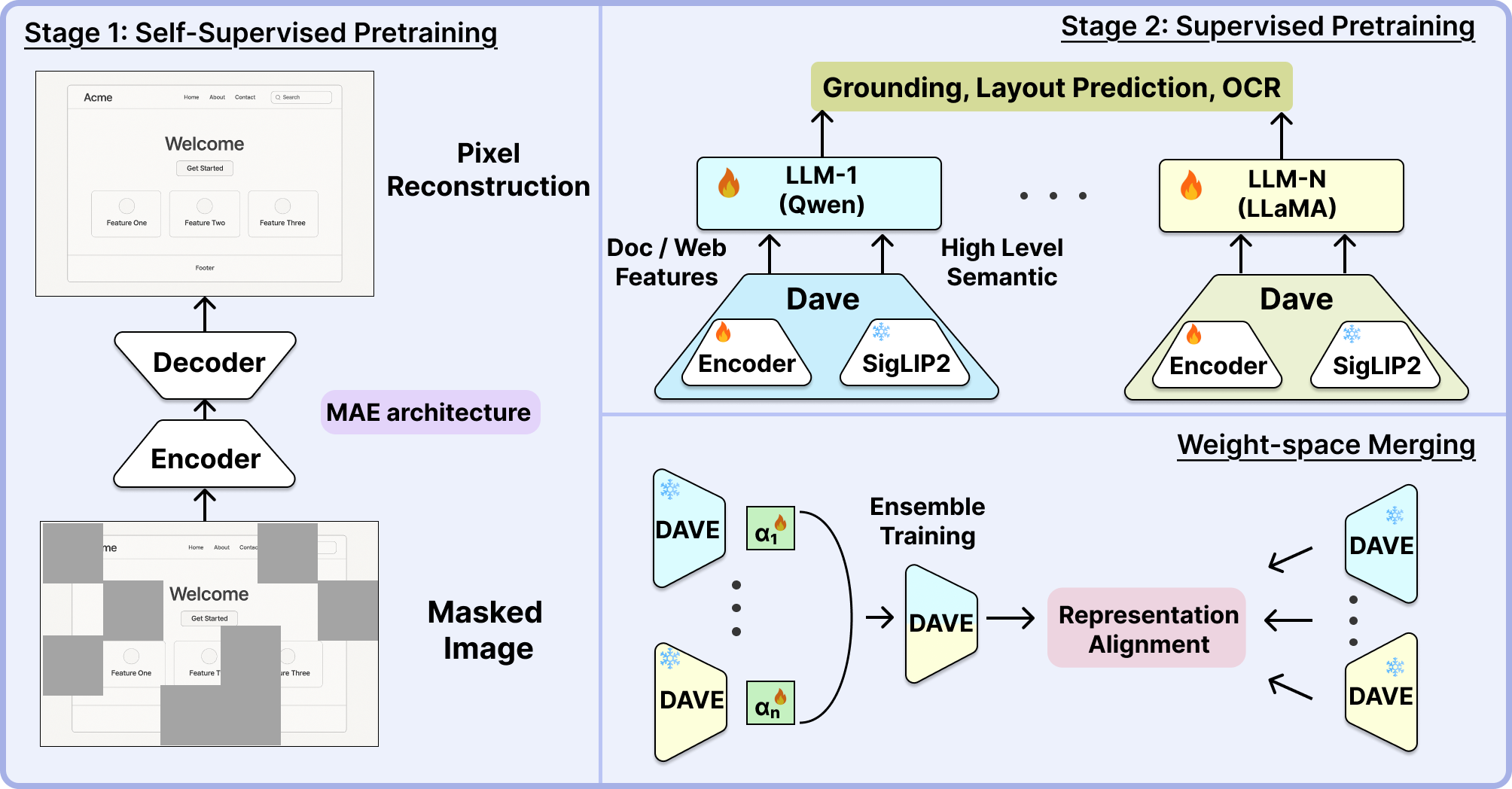

Brandon Huang, Hang Hua, Zhuoran Yu, Trevor Darrell, Rogerio Feris†, Roei Herzig† International Conference on Learning Representations (ICLR), 2026 We introduce DAVE, a vision encoder purpose-built for VLMs and tailored for document understanding and web agents. |

|

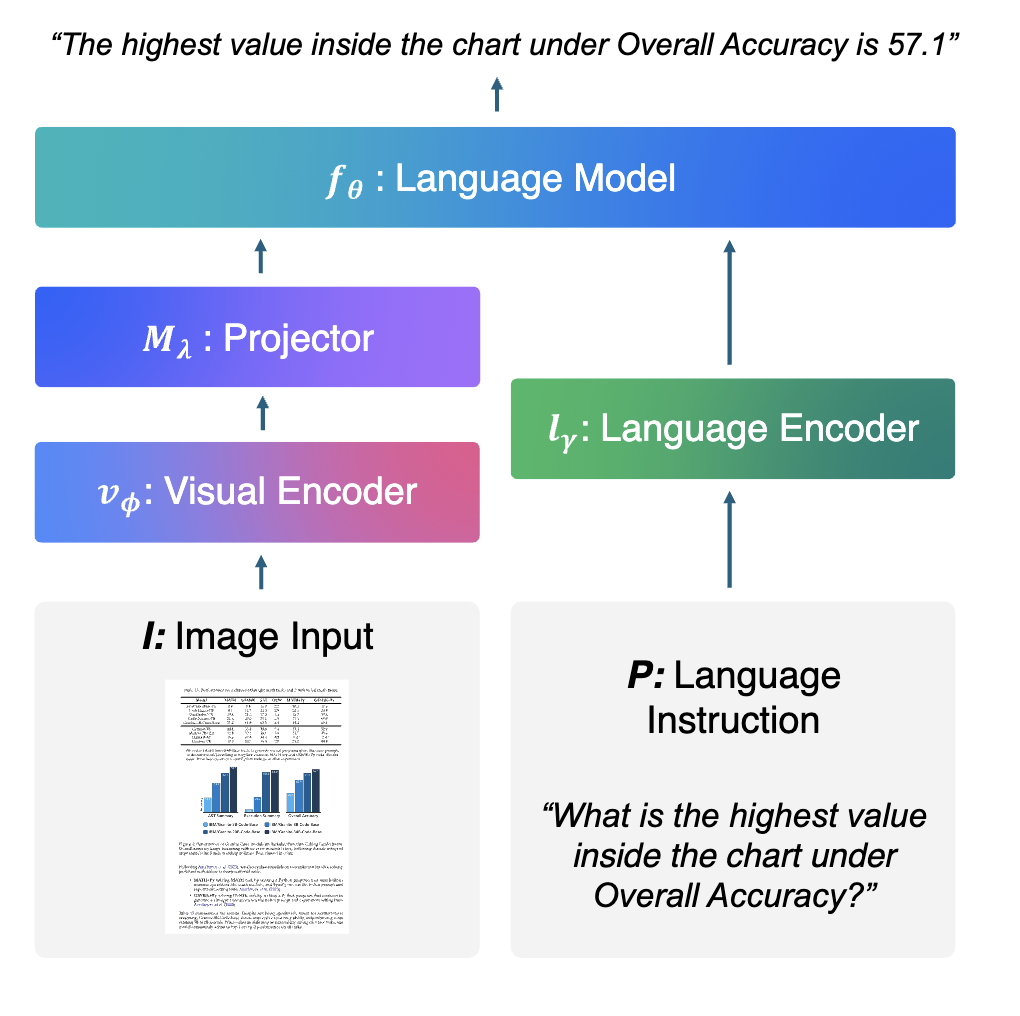

Chancharik Mitra*, Brandon Huang*, Tianning Chai, Zhiqiu Lin, Assaf Arbelle, Rogerio Feris, Leonid Karlinsky, Trevor Darrell, Deva Ramanan, Roei Herzig International Conference on Computer Vision (ICCV), 2025 project page / code / bibtex / social media SAVs is a finetuning-free method that leverages sparse attention head activations (fewer than 5% of heads) in LMMs as powerful feature representations for vision-language classification tasks, achieving state-of-the-art performance compared to both few-shot and finetuned baselines. |

|

Core contributors in alphabetical order: Ahmed Nassar, Amit Alfassi, Bo Wu, Eli Schwartz, Dhiraj Joshi, Jovana Kondic, Nimrod Shabtay, Pengyuan Li, Roei Herzig, Shafiq Abedin, Shaked Perek, Sivan Harary, Udi Barzelay Technical Report, 2025 Hugging Face / social media We introduce Granite Vision, a lightweight large language model with vision capabilities, specifically designed to excel in enterprise use cases, particularly in visual document understanding. |

|

Brandon Huang*, Chancharik Mitra*, Assaf Arbelle, Leonid Karlinsky, Trevor Darrell, Roei Herzig Advances in Neural Information Processing Systems (NeurIPS), 2024 code / bibtex / social media We demonstrate the existence of multimodal task vectors, compact implicit representations of many-shot in-context examples compressed in the model’s attention heads, and leverage them for many-shot in-context learning in LMMs. |

|

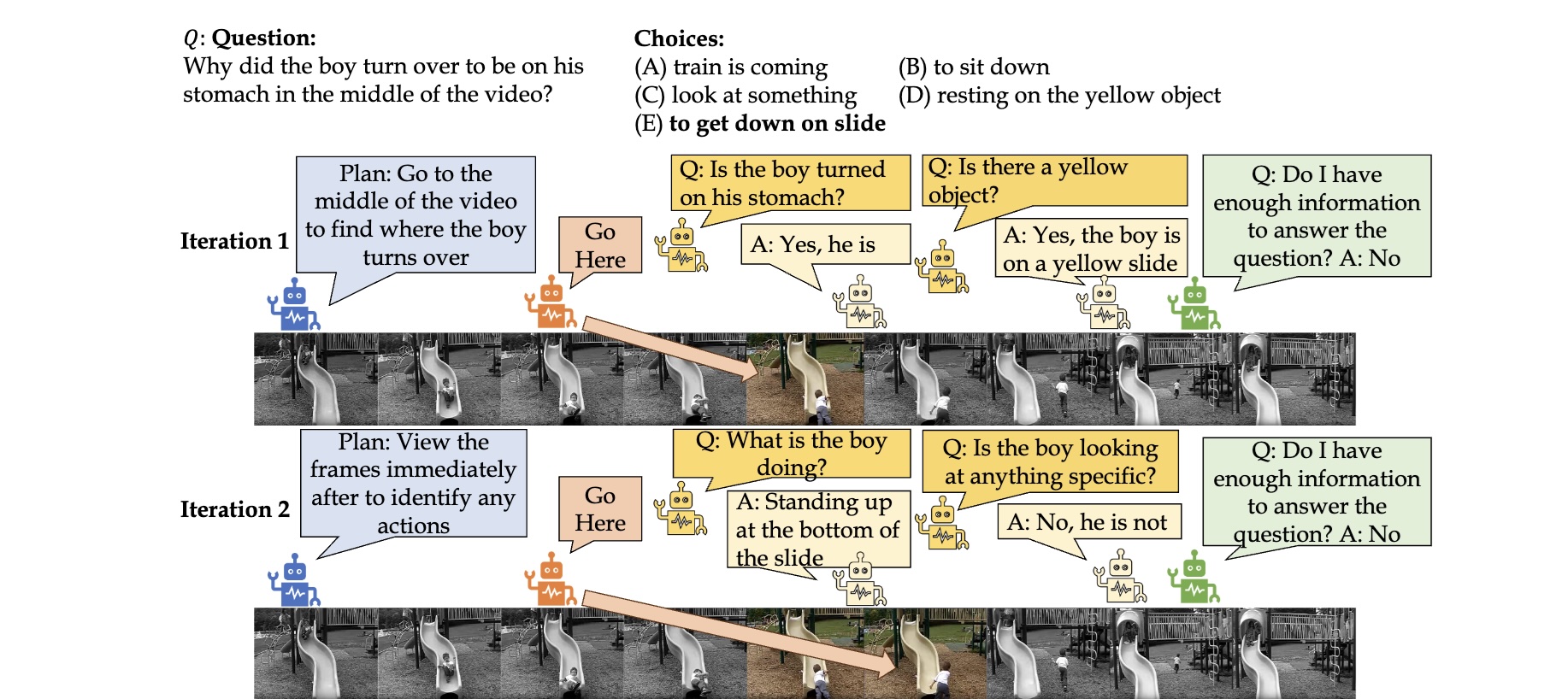

Chuyi Shang*, Amos You*, Sanjay Subramanian, Trevor Darrell, Roei Herzig Conference on Empirical Methods in Natural Language Processing (EMNLP), 2024 project page / code / bibtex We present TraveLER, a modular multi-LMM agent framework for video question-answering that does not require task-specific fine-tuning or annotations. Through interactive question-asking using several agents with different roles, our framework aims to answer the question by collecting relevant information from keyframes. |

|

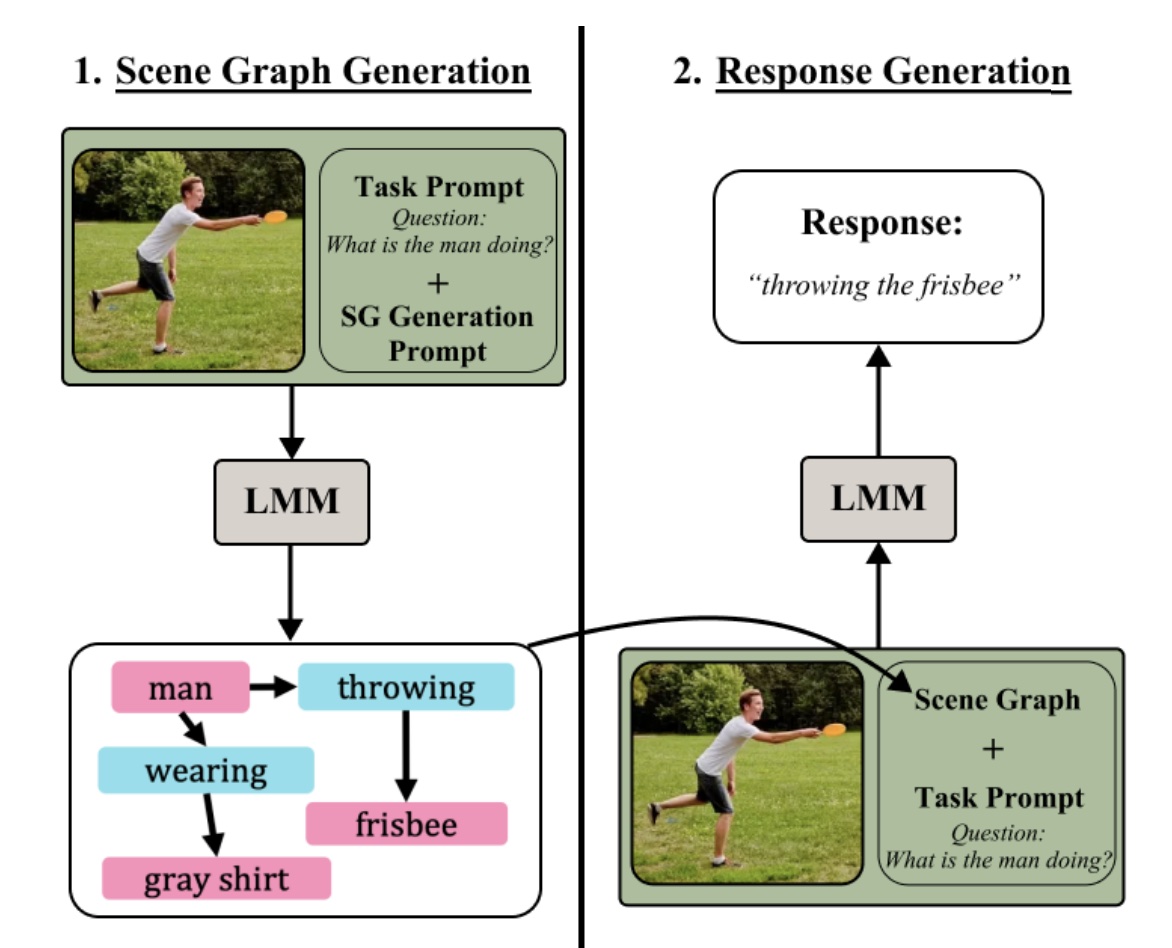

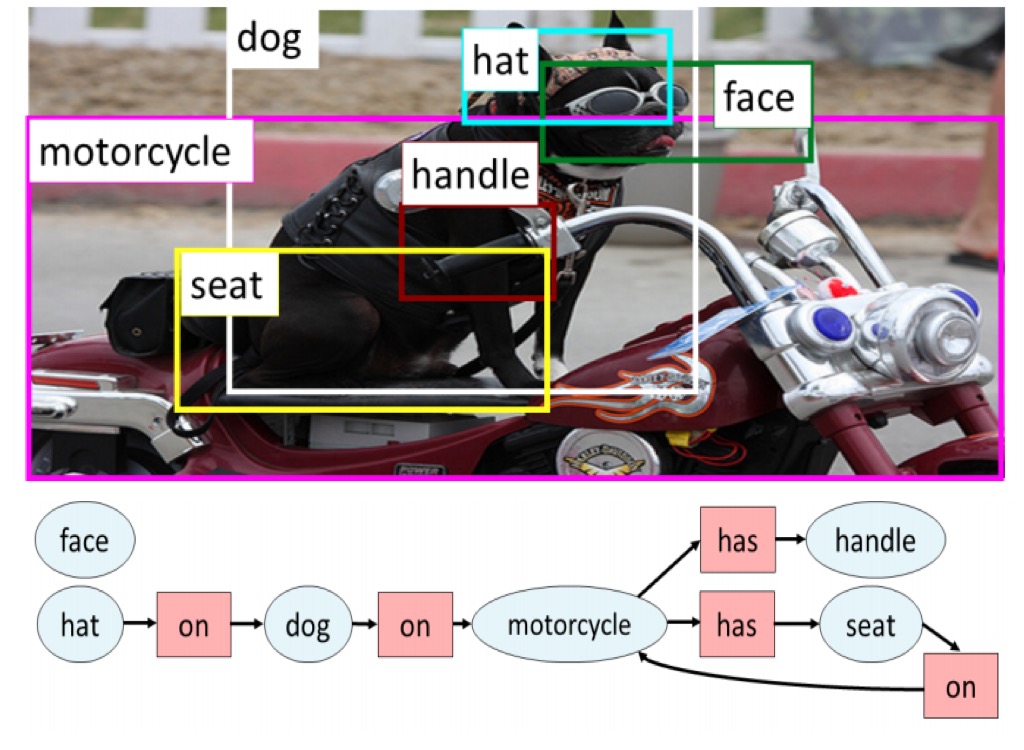

Chancharik Mitra, Brandon Huang, Trevor Darrell, Roei Herzig IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2024 code / bibtex We propose Compositional Chain-of-Thought (CCoT), a novel zero-shot Chain-of-Thought prompting method that utilizes scene graph representations in order to extract compositional knowledge from an LMM. |

|

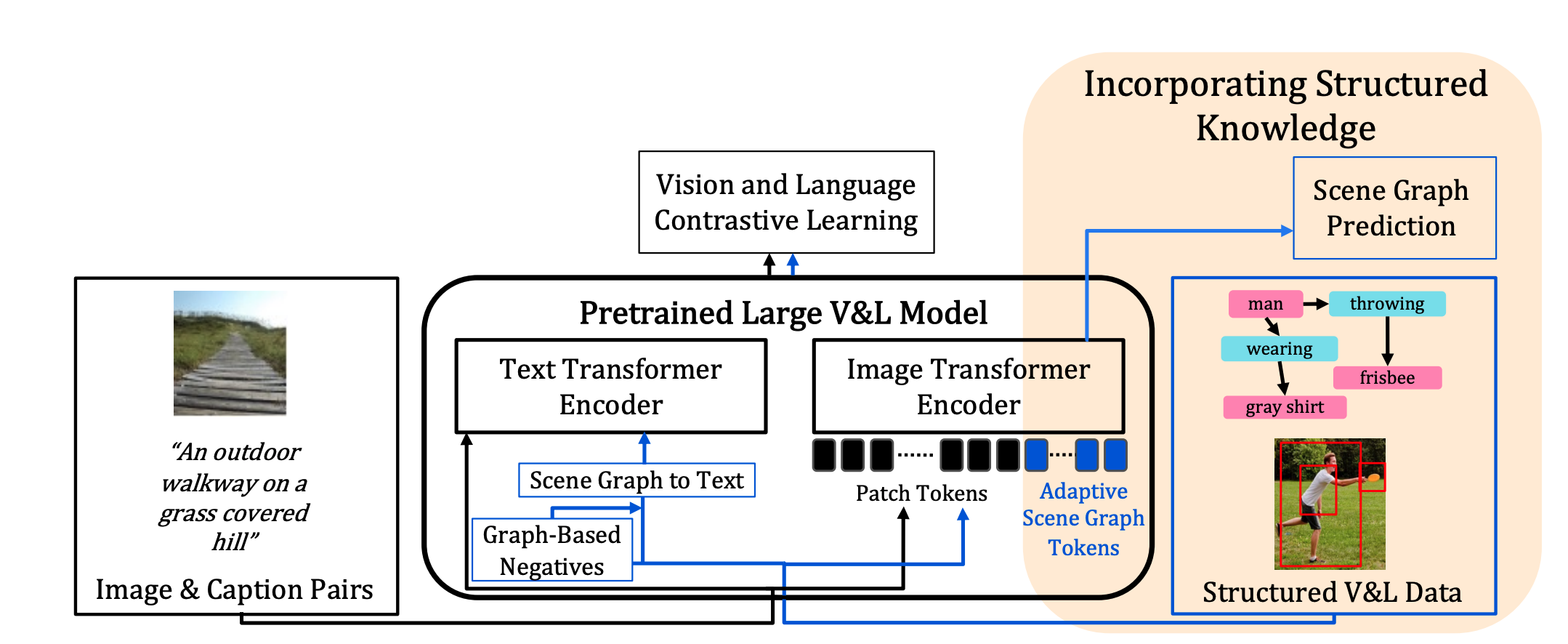

Roei Herzig*, Alon Mendelson*, Leonid Karlinsky, Assaf Arbelle, Rogerio Feris, Trevor Darrell, Amir Globerson Conference on Empirical Methods in Natural Language Processing (EMNLP), 2023 project page / code / bibtex We propose to improve pretrained VLMs, which are usually trained on large-scale image-text pairs, by designing a specialized model architecture and a new training method that utilizes a small set of scene graph annotations from the Visual Genome dataset. These annotations are richer than captions and reflect structured visual and textual information. |

|

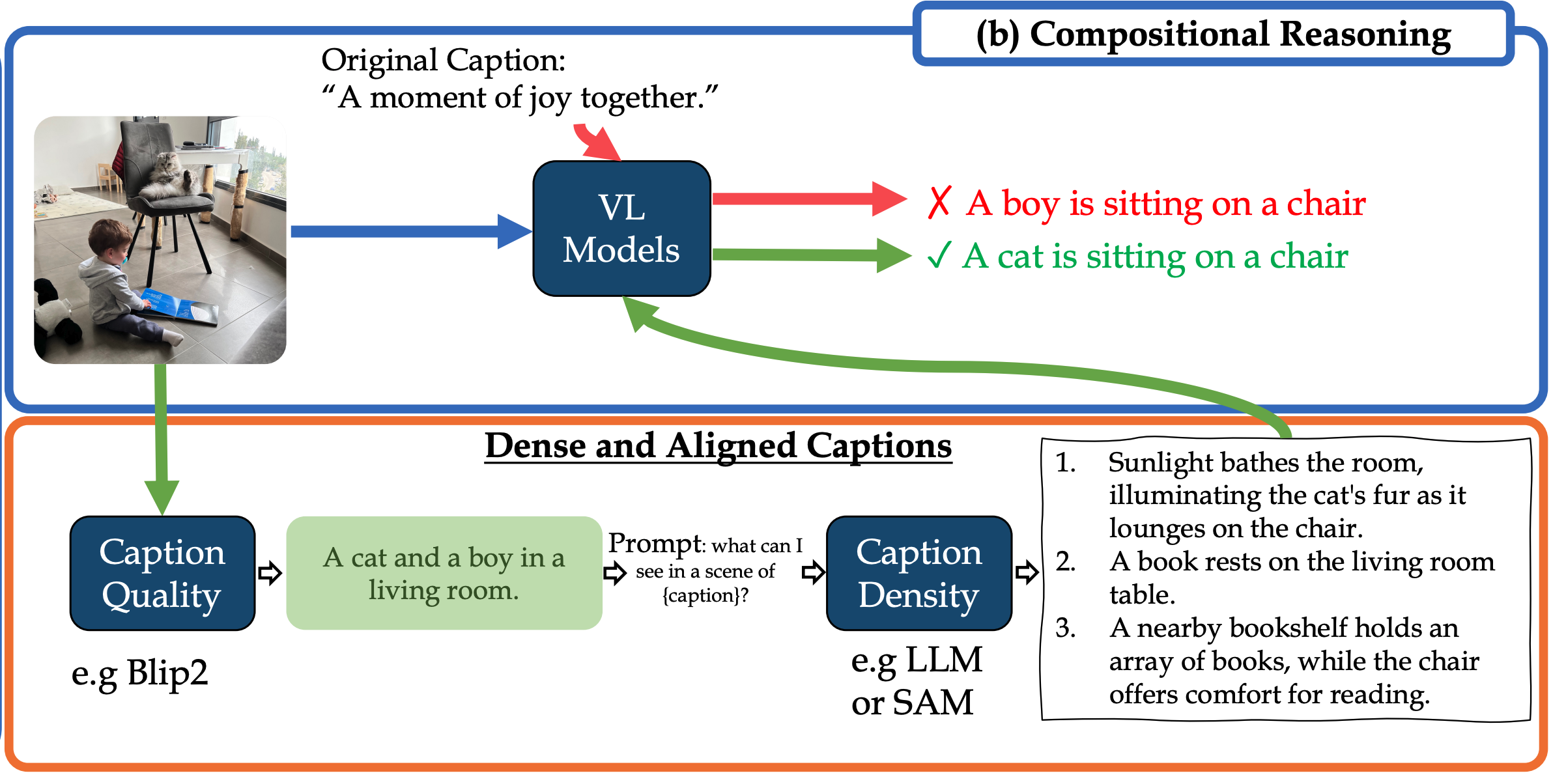

Sivan Doveh, Assaf Arbelle, Sivan Harary, Roei Herzig, Donghyun Kim, Paola Cascante-Bonilla, Amit Alfassy, Rameswar Panda, Raja Giryes, Rogerio Feris, Shimon Ullman, Leonid Karlinsky Advances in Neural Information Processing Systems (NeurIPS), 2023 (Spotlight) project page / code / bibtex We propose a fine-tuning approach for automatically treating two factors limiting VL models’ compositional reasoning performance: (i) the caption quality, or in other words 'image alignment', of the texts; and (ii) the level of caption density, which refers to the number of details that appear in the image. |

|

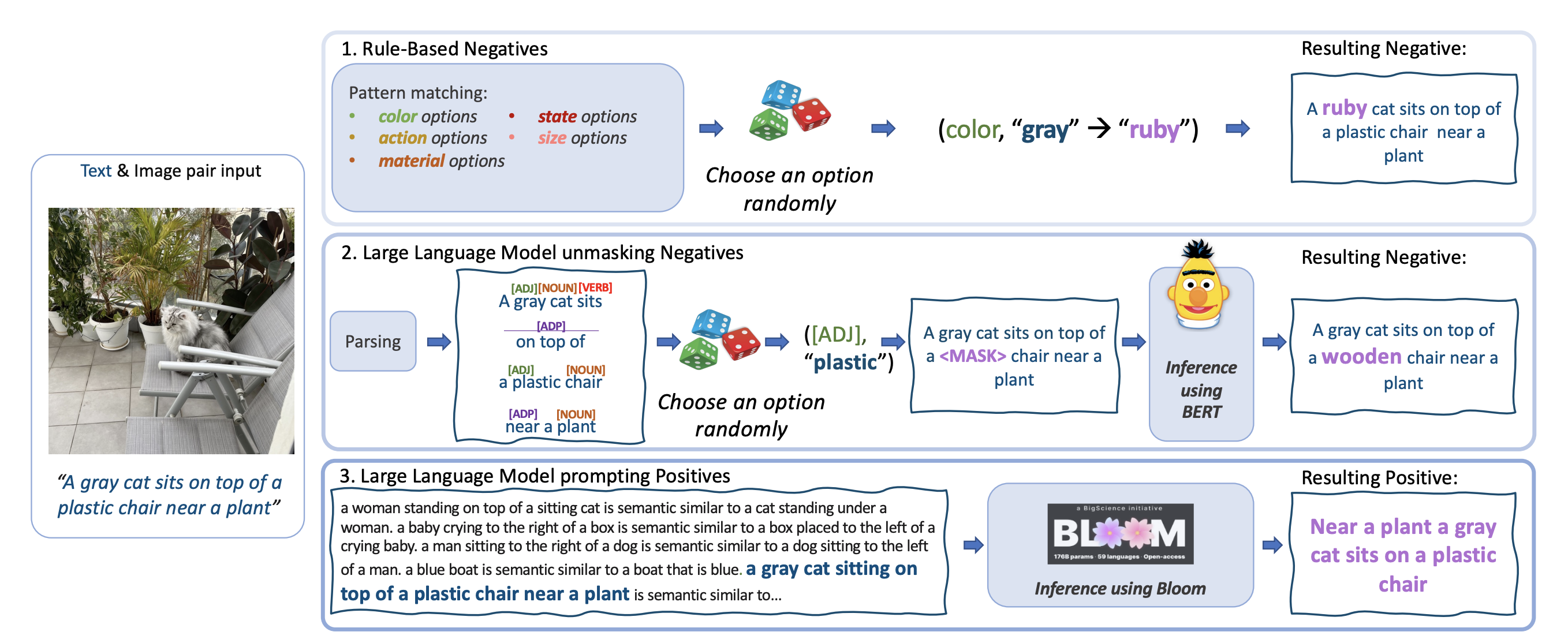

Sivan Doveh, Assaf Arbelle, Sivan Harary, Rameswar Panda, Roei Herzig, Eli Schwartz, Donghyun Kim, Raja Giryes, Rogerio Feris, Shimon Ullman, Leonid Karlinsky IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2023 code / bibtex We demonstrate language augmentation techniques for teaching language structure to VL models. |

|

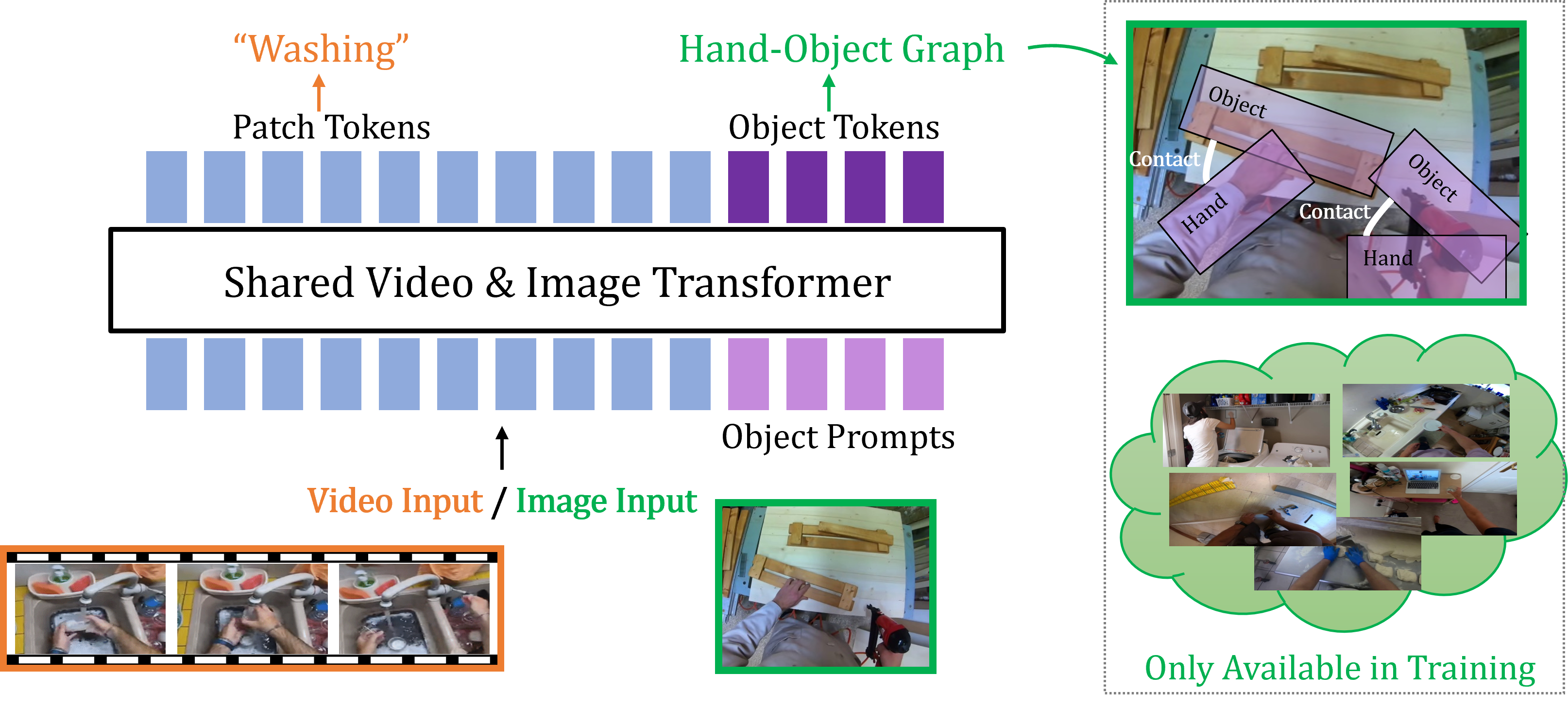

Elad Ben-Avraham, Roei Herzig, Karttikeya Mangalam, Amir Bar, Anna Rohrbach, Leonid Karlinsky, Trevor Darrell, Amir Globerson Advances in Neural Information Processing Systems (NeurIPS), 2022 Winner of the Ego4D CVPR'22 Point of No Return Temporal Localization Challenge, 2022 project page / code / bibtex We present SViT (for Structured Video Tokens), a model that utilizes the structure of a small set of images, whether they are within or outside the domain of interest, available only during training for a video downstream task. |

|

Roei Herzig, Elad Ben-Avraham, Karttikeya Mangalam, Amir Bar, Gal Chechik, Anna Rohrbach, Trevor Darrell, Amir Globerson IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2022 project page / code / bibtex We present ORViT, an object-centric approach that extends video transformer layers with a block that directly incorporates object representations. |

|

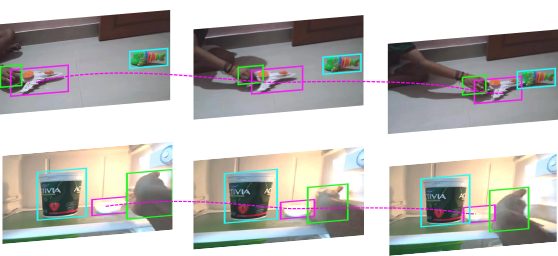

Amir Bar*, Roei Herzig*, Xiaolong Wang, Anna Rohrbach, Gal Chechik, Trevor Darrell, Amir Globerson International Conference on Machine Learning (ICML), 2021 project page / code / slides / bibtex We introduce the formalism of Action Graphs, a natural and convenient structure representing the dynamics of actions between objects over time. We show we can synthesize goal-oriented videos on the CATER and Something-Something datasets and generate novel compositions of unseen actions. |

|

Roei Herzig*, Amir Bar*, Huijuan Xu, Gal Chechik, Trevor Darrell, Amir Globerson Proceedings of the European Conference on Computer Vision (ECCV), 2020 project page / code / bibtex We present a novel model that can inherently learn canonical graph representations and show better robustness to graph size, adversarial attacks, and semantic equivalence, thus generating superior images of complex visual scenes. |

|

Joanna Materzynska, Tete Xiao, Roei Herzig, Huijuan Xu*, Xiaolong Wang*, Trevor Darrell* IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2020 project page / code / dataset / bibtex We propose a novel compositional action recognition task where the training combinations of verbs and nouns do not overlap with the test set. We show the effectiveness of our approach on the proposed compositional task and a few-shot compositional setting which requires the model to generalize across both object appearance and action category. |

|

Roei Herzig*, Elad Levi*, Huijuan Xu*, Hang Gao, Eli Brosh, Xiaolong Wang, Amir Globerson, Trevor Darrell Workshop on Autonomous Driving at ICCV, 2019 (Oral) code / bibtex We propose a latent inter-object graph representation for activity recognition that explores the visual interaction between the objects in a self-supervised manner. |

|

Eran Goldman*, Roei Herzig*, Aviv Eisenschtat*, Jacob Goldberger, Tal Hassner IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2019 code / dataset / bibtex We collect a new SKU-110K dataset which takes detection challenges to unexplored territories, and propose a novel mechanism to learn deep overlap rates for each detection. |

|

Roei Herzig*, Moshiko Raboh*, Gal Chechik, Jonathan Berant, Amir Globerson Advances in Neural Information Processing Systems (NeurIPS), 2018 code / bibtex We propose a novel invariant graph network for mapping images to scene graphs using the permutation invariant property, which achieves new state-of-the-art results on the Visual Genome dataset.

Students and Collaborators

If you are a student interested in collaborating on research projects, please reach out.

- Elad Ben-Avraham (2021-2022)---MSc student at Tel Aviv University; Now working at Amazon.

- Alon Mendelson (2022-2023)---MSc student at Tel Aviv University; Now working at Opti.

- Chancharik Mitra (2023-Present)---Undergraduate at UC Berkeley; Now an MSc student in Deva Ramanan's lab at CMU.

- Brandon Huang (2023-Present)---Undergraduate & MSc student at UC Berkeley; Now interning at the MIT-IBM Watson AI Lab.

- Dantong Niu (2023-Present)---PhD student at UC Berkeley.

- Yida (David) Yin (2023-2025)---Undergraduate student at UC Berkeley; Now an incoming PhD student at Princeton.

- Yuvan Sharma (2023-Present)---Undergraduate student at UC Berkeley.

- Chuyi Shang (2023-Present)---Undergraduate student at UC Berkeley; Now an MSc student at UC Berkeley.

- Amos You (2023-2024)---Undergraduate student at UC Berkeley; Now an MSc student at UC Berkeley.

- Baifeng Shi (2024-Present)---PhD student at UC Berkeley.

- Tianning Ray Chai (2024-Present)---Undergraduate visiting student at UC Berkeley.

- Zekai Wang (2024-Present)---Undergraduate student at UC Berkeley.

- James Ni (2024-Present)---Undergraduate student at UC Berkeley.

- Anirudh Pai (2024-Present)---Undergraduate student at UC Berkeley; Now an MSc student at UC Berkeley.

- Elvis Hsieh (2024-Present)---Undergraduate student at UC Berkeley.

- Mihir Rao (2025-Present)---Undergraduate student at UC Berkeley; Now interning at NVIDIA.

- Abrar Anwar (2025-Present)---PhD student at University of Southern California; Now interning at NVIDIA.

- Kelvin Li (2025-Present)---Undergraduate student at UC Berkeley.

Invited Talks

Partial list of invited talks and presentations.

- Structured Robotic Visual Intelligence (Spring 2024 BAIR Robotics Workshop), April 2025.

- Towards Compositionality in Large Multimodal Models (Fall 2023 BAIR Visual AI Workshop), December 2023.

- Towards Compositionality in Video Understanding (Israeli Vision Day), January 2023.

- NeurIPS 2022 Highlights (TAU fundamental of AI, Tel Aviv University), December 2022.

- Towards Compositionality in Video Understanding (Vision and AI Seminar, Weizmann Institute of Science), December 2022.

- Towards Compositionality in Video Understanding (Israeli Association for AI Conference 2022), June 2022.

- ORViT: Object-Region Video Transformers (BAIR Visual Computing Workshop), March 2022.

- Towards Compositionality in Video Understanding (IMVC 2021), Oct 2021.

- Towards Compositionality in Video Understanding by Prof. Trevor Darrell (ICCV21 SRVU Workshop), Oct 2021.

- Compositional Video Synthesis with Action Graphs (Israel Vision Day), Dec 2020.

- Learning Canonical Representations for Scene Graph to Image Generation (BAIR Fall Seminar), Aug 2020.

- Compositional Video Synthesis with Action Graphs (Israeli Geometric Deep Learning), Aug 2020. Slides.

- Structured Semantic Understanding for Videos and Images (Computer Graphics Seminar at TAU), Jun 2020. Slides.